I am a technologist (data scientist) by day and a humanist (writer) by night. I develop natural language processing and human-AI collaboration systems, ranging from customer journey tracking and product recommendation to search engines and chatbots, in the Business Intelligence Development Department at Taishin International Bank, Taiwan. Our machine learning models have boosted customer conversion rates by 2x to 5x. My academic background is in computer science and machine learning, with research experience in Human-Computer Interaction (HCI), spanning cross-device interaction, proxemic interaction, and accessibility, at St Andrews (undergraduate), UCL (master's), and Lancaster (PhD) in the UK, as well as in Taiwan. I also spent one year as an English teaching assistant in Hualien, Taiwan. My personal interests are in human-centered computing, humanistic psychology, and education. I read and write about technology and life stories, including biographies, life challenges, and self-actualization.

Life Events

Writing

A selected collection of my writing pieces:

Work

A selected collection of my work projects:

2020. Taishin Data Team. Role: Full-Stack Development and Data Engineering. Customer Journey.

A data infrastructure for tracking customer journey, managing and serving machine learning features, and predicting customer actions. Its technology stack includes Hadoop and Kafka.

2019. Taishin Data Team. Role: Full-Stack Development and Data Engineering. Hybrid Data Search Engine.

A search engine and analytics platform for unstructured big data. Its technology stack includes Elasticsearch, Docker, and Flask.

Research

A selected collection of my research projects:

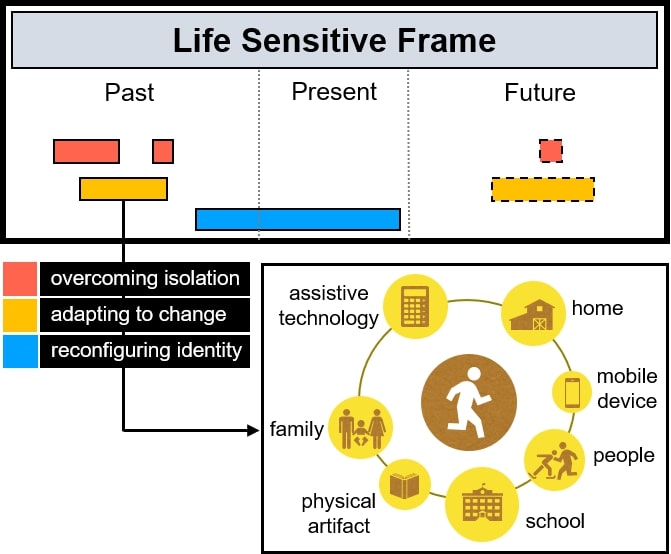

2019. Ching Su and Chi-Jui Wu. Weaving Human Experiences: Life Challenges in People with Visual Impairments. Unpublished manuscript.

A growing interest within HCI is in understanding how disability emerges for people with visual impairments through interactions in situated, sociotechnical contexts. However, much of this work focuses on the accessibility experience in specific moments. In contrast, a person's whole life experience and life challenges have received little attention. We seek to weave the threads of accessibility research on diverse human experiences by exploring how technology, society, and culture jointly influence a person's growth throughout their lives. Human life is always changing, and our values and identity are reflected in our life stories. Through life story interviews with ten participants in Taiwan, we discuss three life challenges to personal growth in people with visual impairments and how technologies mediate the development of these life challenges. Finally, we introduce the life sensitive frame to support people with visual impairments as they grow and tackle their life challenges.

2019. Frederik Brudy, Christian Holz, Roman Rädle, Chi-Jui Wu, Steven Houben, Clemens Klokmose, and Nicolai Marquardt. Cross-Device Taxonomy: Survey, Opportunities and Challenges of Interactions Spanning Across Multiple Devices. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI'19).

Designing interfaces or applications that move beyond the bounds of a single device screen enables new ways to engage with digital content. Research addressing the opportunities and challenges of interactions with multiple devices in concert is of continued focus in HCI research. To inform the future research agenda of this field, we contribute an analysis and taxonomy of a corpus of 510 papers in the cross-device computing domain. For both new and experienced researchers in the field we provide: an overview, historic trends and unified terminology of cross-device research; discussion of major and under-explored application areas; mapping of enabling technologies; synthesis of key interaction techniques spanning across multiple devices; and review of common evaluation strategies. We close with a discussion of open issues. Our taxonomy aims to create a unified terminology and common understanding for researchers in order to facilitate and stimulate future cross-device research.

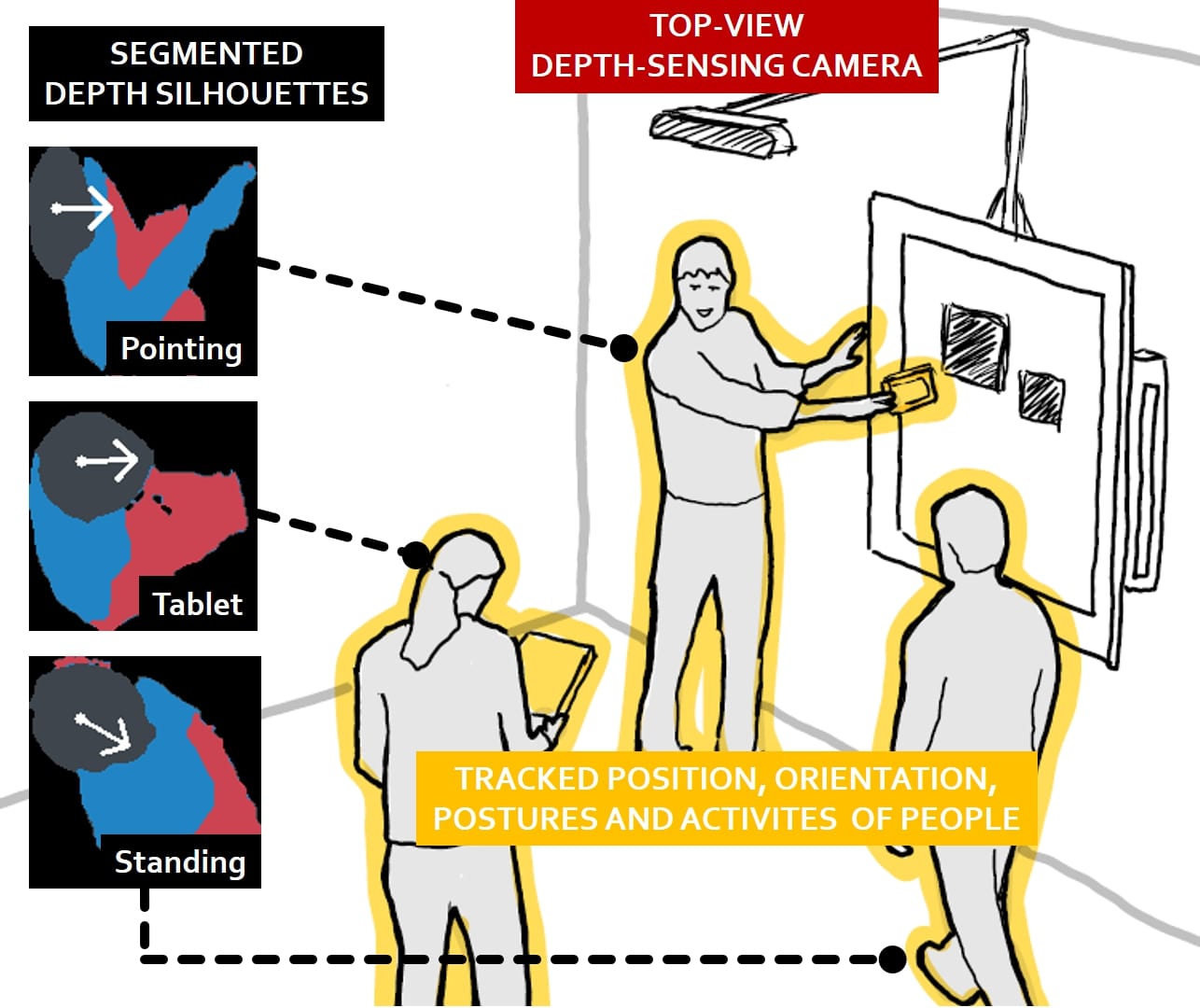

2017. Chi-Jui Wu, Steven Houben, and Nicolai Marquardt. EagleSense: Tracking People and Devices in Interactive Spaces using Real-Time Top-View Depth-Sensing. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI'17).

Real-time tracking of people's location, orientation and activities is increasingly important for designing novel ubiquitous computing applications. Top-view camera-based tracking avoids occlusion when tracking people while collaborating, but often requires complex tracking systems and advanced computer vision algorithms. To facilitate the prototyping of ubiquitous computing applications for interactive spaces, we developed EagleSense, a real-time human posture and activity recognition system with a single top-view depth-sensing camera. We contribute our novel algorithm and processing pipeline, including details for calculating silhouette-extremities features and applying gradient tree boosting classifiers for activity recognition optimized for top-view depth sensing. EagleSense provides easy access to the real-time tracking data and includes tools for facilitating the integration into custom applications. We report the results of a technical evaluation with 12 participants and demonstrate the capabilities of EagleSense with application case studies.

2017. Chi-Jui Wu, Aaron Quigley, and David Harris-Birtill. Out of Sight: A Toolkit for Tracking Occluded Human Joint Positions. In Personal and Ubiquitous Computing (PUC'17).

Real-time identification and tracking of the joint positions of people can be achieved with off-the-shelf sensing technologies such as the Microsoft Kinect, or other camera-based systems with computer vision. However, tracking is constrained by the system’s field of view of people. When a person is occluded from the camera view, their position can no longer be followed. Out of Sight addresses the occlusion problem in depth-sensing tracking systems. Our new tracking infrastructure provides human skeleton joint positions during occlusion, by combining the field of view of multiple Kinects using geometric calibration and affine transformation. We verified the technique’s accuracy through a system evaluation consisting of 20 participants in stationary position and in motion, with two Kinects positioned parallel, 45°, and 90° apart. Results show that our skeleton matching is accurate to within 16.1 cm (s.d. = 5.8 cm), which is within a person’s personal space. In a realistic scenario study, groups of two people quickly occlude each other, and occlusion is resolved for 85% of the participants. A RESTful API was developed to allow distributed access of occlusion-free skeleton joint positions. As a further contribution, we provide the system as open source. |
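As a rough illustration of the idea of combining two sensors' views, the sketch below maps joint positions from a second sensor's coordinate frame into the first sensor's frame and uses them to fill in joints the first sensor could not see. It is a minimal sketch under stated assumptions, not the Out of Sight implementation: the calibration is reduced to a rotation about the vertical axis plus a translation (a special case of an affine transformation), and the names `make_transform` and `merge_skeletons`, the joint labels, and all numbers are hypothetical.

```python
import math
from typing import Callable, Dict, Optional, Tuple

Point = Tuple[float, float, float]
# A joint maps to None when the sensor could not see it (e.g. it was occluded).
Skeleton = Dict[str, Optional[Point]]


def make_transform(yaw_degrees: float, translation: Point) -> Callable[[Point], Point]:
    """Return a function mapping sensor-B coordinates into sensor-A coordinates.

    Assumed (hypothetical) calibration: B is rotated by `yaw_degrees` about the
    vertical (y) axis relative to A and offset by `translation`, in metres.
    """
    theta = math.radians(yaw_degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    tx, ty, tz = translation

    def apply(p: Point) -> Point:
        x, y, z = p
        # Rotate about the y axis, then translate.
        return (cos_t * x + sin_t * z + tx,
                y + ty,
                -sin_t * x + cos_t * z + tz)

    return apply


def merge_skeletons(primary: Skeleton, secondary: Skeleton,
                    b_to_a: Callable[[Point], Point]) -> Skeleton:
    """Prefer sensor A's joints; fall back to sensor B's joints mapped into A's frame."""
    merged: Skeleton = {}
    for joint in primary.keys() | secondary.keys():
        seen_by_a = primary.get(joint)
        if seen_by_a is not None:
            merged[joint] = seen_by_a
            continue
        seen_by_b = secondary.get(joint)
        merged[joint] = b_to_a(seen_by_b) if seen_by_b is not None else None
    return merged


# Example: sensor B sits 90 degrees around the room from sensor A (made-up values).
b_to_a = make_transform(90.0, (2.0, 0.0, 2.0))
view_a: Skeleton = {"head": (0.0, 1.6, 2.0), "hand_left": None}
view_b: Skeleton = {"head": (0.0, 1.6, 2.0), "hand_left": (0.0, 1.0, 1.0)}
merged = merge_skeletons(view_a, view_b, b_to_a)
```

In this example the left hand is missing from sensor A's view, so its position comes from sensor B after transformation, while the head keeps sensor A's own reading. A real calibration would estimate the full transformation from matched points seen by both sensors rather than assume a known angle and offset.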

Chi-Jui Wu 吳啟瑞 | Last Updated: 2020/02/09